Last week I gave a talk at SXSW 2013 in Austin about some of the things I’m thinking about these days—including quite a few that I’ve never talked publicly about before. Here’s a video, and a slightly edited transcript:

Well, this is a pretty exciting time for me. Because it turns out that a whole bunch of things that I’ve been working on for more than 30 years are all finally converging, in a very nice way. And what I’d like to do here today is tell you a bit about that, and about some things I’ve figured out recently—and about what it all means for our future.

This is going to be a bit of a wild talk in some ways. It’s going to go from pretty intellectual stuff about basic science and so on, to some really practical technology developments, with a few sneak peeks at things I’ve never shown before.

Let’s start from some science. And you know, a lot of what I’ll say today connects back to what I thought at first was a small discovery that I made about 30 years ago. Let me tell you the story.

I started out at a pretty young age as a physicist. Diligently doing physics pretty much the way it had been done for 300 years. Starting from this-or-that equation, and then doing the math to figure out predictions from it. That worked pretty well in some cases. But there were too many cases where it just didn’t work. So I got to wondering whether there might be some alternative; a different approach.

At the time I’d been using computers as practical tools for quite a while—and I’d even created a big software system that was a forerunner of Mathematica. And what I gradually began to think was that actually computers—and computation—weren’t just useful tools; they were actually the main event. And that one could use them to generalize how one does science: to think not just in terms of math and equations, but in terms of arbitrary computations and programs.

So, OK, what kind of programs might nature use? Given how complicated the things we see in nature are, we might think the programs it’s running must be really complicated. Maybe thousands or millions of lines of code. Like programs we write to do things.

But I thought: let’s start simple. Let’s find out what happens with tiny programs—maybe a line or two of code long. And let’s find out what those do. So I decided to do an experiment. Just set up programs like that, and run them. Here’s one of the ones I started with. It’s called a cellular automaton. It consists of a line of cells, each one either black or not. And it runs down the page computing the new color of each cell using the little rule at the bottom there.

![Rule 254]()
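Here's a minimal sketch of how a rule like the one in the picture runs. It is written in Python rather than the Wolfram Language and is not code from the talk. It assumes the usual numbering convention for these rules: the three cells above a position are read as a binary number k from 0 to 7, and bit k of the rule number gives the new color. The names `step` and `run` are just illustrative.

```python
def step(cells, rule):
    """Apply one step of an elementary cellular automaton.

    cells: list of 0/1 values (1 = black), treated as a ring.
    rule:  rule number 0-255; bit k gives the new color for the
           neighborhood whose (left, center, right) bits read as k.
    """
    n = len(cells)
    return [
        (rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def run(rule, steps):
    """Start from a single black cell and return steps + 1 rows."""
    width = 2 * steps + 1      # wide enough that the pattern never wraps around the ring
    row = [0] * width
    row[steps] = 1             # single black cell in the middle
    rows = [row]
    for _ in range(steps):
        row = step(row, rule)
        rows.append(row)
    return rows


for row in run(254, 5):
    print("".join("#" if c else "." for c in row))
```

Changing 254 to 30 or 110 in the last loop runs the other rules that come up in this talk.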

OK, so there’s a simple program, and it does something simple. But let’s point our computational telescope out into the computational universe and just look at all simple programs that work like the one here.

![Cellular automata rules]()

Well, we see a bunch of things going on. Often pretty simple. A repeating pattern. Sometimes a fractal. But you don’t have to go far before you see much stranger stuff.

This is a program I call “rule 30”. What’s it doing? Let’s run it a little longer.

![Rule 30]()

That’s pretty complicated. And if we just saw this somewhere out there, we’d probably figure it was pretty hard to make. But actually, it all comes just from that tiny program at the bottom. That’s it. And when I first saw this, it was my sort of little modern “Galileo moment”. I’d seen something through my computational telescope that eventually made me change my whole world view. And made me realize that computation—even as done by a tiny program like the one here—is vastly more powerful and important than I’d ever imagined.
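The tiny rule at the bottom of the rule 30 picture can also be written as a one-line Boolean formula: the new color is left XOR (center OR right). The Python sketch below is a restatement, not code from the talk. It checks that formula against the rule number 30 for all eight neighborhoods, then collects the colors down the center column, which is the part of the pattern that looks random. The function names are hypothetical.

```python
def rule30_cell(left, center, right):
    # Rule 30 as a Boolean formula: new = left XOR (center OR right)
    return left ^ (center | right)


# Check the formula against the rule number 30 = 0b00011110 for all 8 neighborhoods.
for k in range(8):
    l, c, r = (k >> 2) & 1, (k >> 1) & 1, k & 1
    assert rule30_cell(l, c, r) == (30 >> k) & 1


def center_column(steps):
    """Colors of the middle cell of rule 30, starting from one black cell."""
    width = 2 * steps + 1          # the pattern never reaches the ring's wrap-around point
    row = [0] * width
    row[steps] = 1
    column = [row[steps]]
    for _ in range(steps):
        row = [rule30_cell(row[i - 1], row[i], row[(i + 1) % width]) for i in range(width)]
        column.append(row[steps])
    return column


print(center_column(20))
```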

![Cellular automata]()

Well, I’ve spent the past few decades working through the consequences of this. And it’s led me to build a new kind of science, to create all sorts of practical technology, and to think about almost everything in a different way. I published a big book about the science about ten years ago. And at the time when the book came out, there was quite a bit of “paradigm shift turbulence”. But looking back it’s really nice to see how well the science has taken root.

![Stephen Wolfram, A New Kind of Science]()

![Academic papers making use of NKS]()

And for example there are models based on my kinds of simple programs showing up everywhere. After 300 years of being dominated by Newton-style equations and math, the frontiers are definitely now going to simple programs and the new kind of science.

But there’s still one ultimate app out there to be done: to figure out the fundamental theory of physics—to figure out how our whole universe works. It’s kind of tantalizing. We see these very simple programs, with very complex behavior.

![Cellular automaton]()

It makes one think that maybe there’s a simple program for our whole universe. And that even though physics seems to involve more and more complicated equations, that somewhere underneath it all there might just be a tiny little program. We don’t know if things work that way. But if out there in the computational universe of possible programs, the program for our universe is just sitting there waiting to be found, it seems embarrassing not to be looking for it.

Now if there is indeed a simple program for our universe, it’s sort of inevitable that it has to operate kind of underneath our standard notions like space and time and so on. Maybe it’s a little like this.

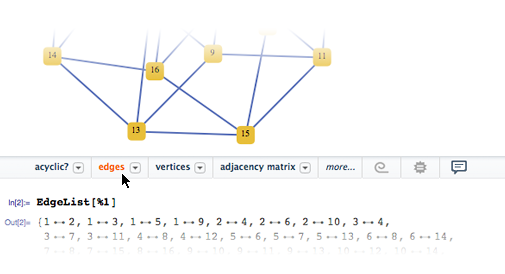

![Network]()

A giant network of nodes that make up space, a bit like molecules make up the air in this room. Well, you can start just trying possible programs that create such things. Each one is in a sense a candidate universe.

![Collection of universes]()

And when you do this, you can pretty quickly say most of them can’t be our universe. Time stops after an instant. There are an infinite number of dimensions. There can’t be particles or matter. Or other pathologies.

But what surprised me is that you don’t have to go very far in this universe of possible universes before you start finding ones that are very plausible. And that for example seem like they’ll show the standard laws of gravity, and even some features of quantum mechanics. At some level it turns out to be irreducibly hard to work out what some of these candidate universes will do. But it’s quite possible that already caught in our net is the actual program for our universe. The whole thing. All of reality.

Well, if you’d asked me a few years ago what I thought I’d be doing now, I’d probably have said “hunting for our universe”. But fortunately or unfortunately, I got seriously sidetracked. Because I realized that once one starts to understand the idea of computation, there’s just an incredible amount of technology one can build—that’s to me quite fascinating, and that I think is also pretty important for the world. And in fact, right off the bat, there’s a whole new methodology one can use for creating technology.

I mean, we’re used to doing traditional engineering—where we build things up step by step. But out there in the computational universe, we now know that there are all these programs lying around that already do amazing things. So all we have to do is to go out and mine them, and find ones that fit whatever technological purpose we’re trying to achieve.

And actually we’ve been using this kind of automated algorithm discovery for quite some time now. By now Mathematica and Wolfram|Alpha are full of algorithms and programs that no human would ever have come up with, but were just found by systematically searching the computational universe. There’s a lot that can be done like this. Not just for algorithms, but for art, like this, and for physical structures and devices too.
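Here's a toy version of that kind of search in Python, under assumptions stated plainly. The "purpose" is a made-up one (a center column that looks irregular), and `looks_random` is a crude criterion chosen only for illustration. It is not how algorithms are actually found for Mathematica or Wolfram|Alpha. The sketch enumerates all 256 rules of the kind shown earlier and keeps the ones that pass. In pure Python it takes a few seconds.

```python
def center_column(rule, steps):
    """Middle-cell colors of an elementary CA rule, starting from one black cell."""
    width = 2 * steps + 1          # ring wide enough that the center is unaffected by wrap-around
    row = [0] * width
    row[steps] = 1
    column = [1]
    for _ in range(steps):
        row = [(rule >> (4 * row[i - 1] + 2 * row[i] + row[(i + 1) % width])) & 1
               for i in range(width)]
        column.append(row[steps])
    return column


def looks_random(column, max_period=10):
    """Hypothetical test: roughly balanced, and no short cycle in the second half."""
    ones = sum(column) / len(column)
    if not 0.3 <= ones <= 0.7:
        return False
    tail = column[len(column) // 2:]
    for p in range(1, max_period + 1):
        if all(tail[i] == tail[i + p] for i in range(len(tail) - p)):
            return False           # settles into a cycle of length p
    return True


# "Mine" the space of all 256 elementary rules for ones that fit the purpose.
candidates = [rule for rule in range(256) if looks_random(center_column(rule, 100))]
print(candidates)
```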

![WolframTones]()

Here’s an important point that comes from the basic science. 75 years ago Alan Turing gave us the idea of universal computation. Which is what showed that software was possible, and eventually launched the whole computer revolution. Well, from the science I’ve done comes what I call the Principle of Computational Equivalence. Which among other things implies that not only are universal computers possible; they’re actually really common out there in the computational universe. Like this is the simplest cellular automaton we know is a universal computer—with that tiny little rule at the bottom there.

![Rule 110]()

And from a very successful piece of crowdscience that we did a few years ago, we know this is the simplest possible universal Turing machine.

![The Wolfram 2,3 Turing Machine Research Prize]()

Tiny things. That we can reasonably expect exist all over the natural world. But that are computationally just as powerful as any computer we can build, or any brain, for example. Which explains, by the way, why so much of nature seems so hard for us to decode.

And actually, this starts to get at some big old questions. Like free will. Or like the nature of intelligence. And one of the things that comes out of the Principle of Computational Equivalence is that there really can’t be something special that is intelligence—it’s all just computation. And that has important consequences for thinking about extraterrestrial intelligence. And also for thinking about artificial intelligence.

For me it was this philosophical breakthrough that led to a very practical piece of technology: Wolfram|Alpha. Ever since I was a kid I’d been interested in seeing how to take as much of the knowledge that’s been accumulated in our civilization as possible and make it computable. Somehow make it so that if there’s a question that can be answered on the basis of this knowledge, it can be done automatically.

For years I thought that doing that would require building something like a brain. And every decade or so I would ask myself if it was time yet, and I would conclude that it was just too hard. But finally from the Principle of Computational Equivalence I realized that, no, it all had to be doable just with computation. And that’s how I came to start building Wolfram|Alpha.

I hope you’ve mostly seen Wolfram|Alpha—on the web, in Siri, in apps, or wherever.

![life of pi box office vs die hard]()

The idea is: you ask a question, in natural language, and Wolfram|Alpha tries to compute the answer, and generate a report, using knowledge that it has. At some level, this is an insanely difficult thing to make work. And if we hadn’t managed to do it, I might have thought it was pretty much impossible.

First, you’ve got to get all that data, on all sorts of things in the world. And no, you can’t just forage it from the web. You have to actually go interact with all the primary sources. Really understand the data, with actual human experts. And curate it to the point where it can reliably be used to compute from. And by now I think we’ve got more bytes of raw data inside Wolfram|Alpha than there is meaningful text content on the whole web.

But that’s only the beginning. Most questions people have aren’t answered just by retrieving a piece of data. They need some kind of computation. And for that we’ve had to take all those methods and models and algorithms that come from science and engineering and financial analysis and whatever and implement them. And by now it’s more than ten million lines of very high-level Mathematica code.

So we can compute lots of things. But now we’ve got to know what to compute. And the only realistic way for humans to interface with something this broad is through humans’ natural language. It’s not just keywords; it’s actual pieces of structured language, written or spoken. And understanding that stuff is a classic hard problem.

But we have two secret weapons. First, a bunch of methods from my new kind of science. And second, actual underlying knowledge, a bit like us humans have, that lets us decode and disambiguate.

Over the 3 years since Wolfram|Alpha launched I’m pleased at how far we’ve managed to get. It’s hard work, but now more than 90% of the queries that come to our website we can completely understand. We’ve really cracked the natural language problem, at least for these small snippets.

So once we’ve understood the input, what do we do? Well, what we’ve found is that people almost never want just one answer—42 or whatever. They want a whole custom report built for them. And we’ve developed a methodology now for automatically figuring out what information to present, and how to present it.

Many millions of people use this every day. A few web tourists. An awful lot of students, and professionals, and people wanting to figure all kinds of things out. It’s kind of nice to see how few of the queries we get are things that you can just search for on the web. People are asking us fresh, new, questions whose answers have never been written down before. So the only way to get those answers would be to find a human expert to ask—or to have Wolfram|Alpha compute them. It’s a huge project that I personally expect to keep working on forever.

It’s fascinating of course. Combining all these different areas of human knowledge. Figuring out things like how to curate and make computable human anatomy, or the 3 million or so theorems that exist in the literature of mathematics. I’m quite proud of how far we’ve got already, and how much faster we’re getting at doing things.

![Wolfram|Alpha examples]()

And, you know, it’s not just about public knowledge. We’re also now able to bring in uploaded material, and use our algorithms and knowledge to analyze it. We can bring in a picture. And Wolfram|Alpha will tell us things about it.

![Image upload with Wolfram|Alpha Pro]()

And we could explicitly tell Wolfram|Alpha to do some image computation. It works really nicely on a phone. Or we could upload a spreadsheet. And Wolfram|Alpha can use its linguistics to decode what’s in it, and then automatically generate a report about what’s interesting in the data.

Or we could get data from some internal database and ask natural language questions about it. And get custom reports automatically generated that can use external data as well as internal data. It’s incredibly powerful. And actually we have quite a business going building custom versions of Wolfram|Alpha for companies and other organizations.

It’s gradually getting more and more automated, and actually we’re planning to spin off a company specifically to do this kind of thing.

And you know, given the Wolfram|Alpha technology stack, there are so many places to go. Like having Wolfram|Alpha not just generate information, but actually do things too. You tell it something in natural language. And it uses algorithms and knowledge to figure out what to do.

Here’s a sophisticated case. As part of our high-end business, last year we released Wolfram SystemModeler.

![Wolfram SystemModeler]()

Which is a tool for letting one design and simulate complex devices with tens of thousands of components. Like airplanes or turbines. Well, hooking this up to Wolfram|Alpha, we’ll be able to just ask questions to Wolfram|Alpha, and have it go to SystemModeler to automatically simulate a device, and then figure out how to do something.

![Wolfram SystemModeler]()

Here’s a different direction: set Wolfram|Alpha loose on something like a document, where it can use our natural language technology to automatically add computation.

You know, today Wolfram|Alpha operates as an on-demand system: you say something to it, and it’ll respond. But in the future, it’s increasingly going to be used in a preemptive way. It’s going to sense or see something, and it’s automatically going to show you what it thinks you should know. Right now, the main issue that we see in people using Wolfram|Alpha is that they don’t understand all the things it can do. But in this preemptive mode, there’s no issue with that kind of discovery. Wolfram|Alpha is just going to automatically be figuring out what to show people. And once the hardware for augmented reality is there, this is going to be really neat. I mean, within Mathematica we now have what I think is the world’s most powerful image computation system. And combining this with Wolfram|Alpha capabilities, we’re going to be able to do a lot.

![Wolfram Mathematica 9]()

I mentioned Mathematica here. It’s sort of our secret weapon. It’s how we’ve managed to do everything we’ve done. Including build that outrageously complex thing that is Wolfram|Alpha. Many of you I hope have heard of Mathematica. This June it’ll be the 25th anniversary of the original release of Mathematica. And I’m proud of how many inventions and discoveries have now been made in the world using Mathematica over that period of time. As well as how many students have been educated with it.

You know, I originally built Mathematica for a kind of selfish reason: I wanted to have it myself. And my goal was to make it broad enough that it could handle sort of any kind of computation I’d ever want to do. My approach was kind of a typical natural-science one. Think about all those different kinds of computations, drill down and try to understand the primitives that lie beneath them, and then implement those primitives in the system. And in a sense my plan was ultimately just to implement anything systematic and algorithmic that could be implemented.

Now I had a very important principle right from the beginning: as the system grew, it must always remain consistent and unified. Every new capability that was added must coherently fit into the structure of the system. And it was a huge amount of work to maintain that kind of design discipline. But I have to say that particularly in the last 10 years or so, it’s paid off unbelievably well. Certainly it’s important in letting people learn what’s now a very big system. But even more important is that it’s allowed us to have a very powerful kind of recursive development process, in which anything we add now can “for free” use those huge blocks of functionality that we’ve already built.

The result is that we’ve been covering huge algorithmic areas incredibly fast, and with much more powerful algorithms than have ever been possible before. Actually, a lot of the time we’re really building not just algorithms, but meta-algorithms. Because another big principle we have is that everything should be as automated as possible.

You as a human want to just tell Mathematica what task you’re trying to perform. And there might be 200 different algorithms that could in principle be used. But it’s up to Mathematica to figure out automatically what the best one is. Internally, Mathematica is using very sophisticated algorithms—many of which we’ve invented. But the great thing is that a user doesn’t have to know anything about the details; that’s all handled automatically.
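To make the idea of automatic method selection concrete, here's a small Python sketch of a function that chooses its own algorithm based on the input. The three candidate methods and the thresholds are arbitrary choices for illustration. The talk doesn't describe how Mathematica actually makes these decisions, and `sort_auto` is a hypothetical name.

```python
def insertion_sort(xs):
    out = list(xs)
    for i in range(1, len(out)):
        x, j = out[i], i - 1
        while j >= 0 and out[j] > x:   # shift larger elements one slot right
            out[j + 1] = out[j]
            j -= 1
        out[j + 1] = x
    return out


def counting_sort(xs):
    lo, hi = min(xs), max(xs)
    counts = [0] * (hi - lo + 1)
    for x in xs:
        counts[x - lo] += 1
    return [lo + i for i, c in enumerate(counts) for _ in range(c)]


def sort_auto(xs):
    """The caller states the task; the function picks the method."""
    xs = list(xs)
    if len(xs) <= 16:
        return insertion_sort(xs)      # tiny input: low overhead wins
    if all(isinstance(x, int) for x in xs) and max(xs) - min(xs) <= 4 * len(xs):
        return counting_sort(xs)       # dense, small-range integers
    return sorted(xs)                  # general case: Python's built-in sort
```

The user-facing call is always `sort_auto(data)`. Adding a better method later changes the dispatch inside, not the code that calls it.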

You know, Mathematica has by far the largest set of interconnected algorithmic capabilities that’s ever existed. And it’s not just algorithms that are built in; it’s also knowledge. Because all the knowledge in Wolfram|Alpha is directly accessible, and progressively more closely integrated, in Mathematica. It’s really quite a transformational thing. I call it knowledge-based computing. Whether you’re using the Wolfram|Alpha API or Mathematica, you’re able to do computing in which you can in effect start from the knowledge of the world, and then build from there.

I have to say that I’ve increasingly realized that Mathematica has been rather undersold. People think of it as that great tool for doing math. Which it certainly is. But it’s so much more than that. It was designed that way from the beginning, and as the years go by “math” becomes a smaller and smaller fraction of what the capabilities of Mathematica are about.

Really there are several parts to Mathematica. The most fundamental is the language that Mathematica embodies. It’s ultimately based on the idea that everything can be represented as a symbolic expression. Whether it’s an array of data, an image, a document, a program, an interface, whatever. This is an idea that I had more than 25 years ago—and over the years I’ve gradually realized just how powerful it is: having a small set of primitives that can seamlessly handle all those different kinds of things, and that provides in a sense an elegant “fusion” of many popular modern programming paradigms.
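As a rough illustration of the "everything is a symbolic expression" idea, here's a Python sketch in which a formula, a list of data, and a piece of interface all share one representation: a head applied to a sequence of arguments. `Expr`, `E` and `replace_heads` are hypothetical names. The heads simply borrow Wolfram Language-style names as strings, and this is not how Mathematica is implemented.

```python
from dataclasses import dataclass
from typing import Any, Tuple


@dataclass(frozen=True)
class Expr:
    """A symbolic expression: a head applied to a tuple of arguments."""
    head: str
    args: Tuple[Any, ...] = ()

    def __str__(self):
        return f"{self.head}[{', '.join(map(str, self.args))}]"


def E(head, *args):
    return Expr(head, args)


def replace_heads(expr, rules):
    """Rewrite heads everywhere in the tree, e.g. {"Plus": "Times"}."""
    if not isinstance(expr, Expr):
        return expr                    # atoms (numbers, strings) are left alone
    return Expr(rules.get(expr.head, expr.head),
                tuple(replace_heads(a, rules) for a in expr.args))


# Very different kinds of things share one representation,
# so one transformation function works on all of them.
formula = E("Plus", 1, E("Times", 2, "x"))
data = E("List", 1, 2, 3)
button = E("Button", "Go", E("Print", "clicked"))
print(formula, data, button)
print(replace_heads(formula, {"Plus": "Times"}))
```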

In addition to the symbolic character of the language, there’s another key point. Essentially every other computer language has just a small set of built-in operations. Yes, it has all sorts of mechanisms for handling in a sense the “infrastructure” of programming. But when it comes to algorithms and so on, there’s very little there. Maybe there are libraries, but they’re not unified, and they’re not really part of the language. Well, the point in our language is that all those algorithms are actually built right into the language. And that’s not all, there’s actual knowledge and data also built into the language.

It’s really a new kind of language. Something very different than others. And something incredibly productive for people who use it. But I have to say, in a sense I think it’s been rather hidden all these years. Not that there aren’t millions of people using the language through Mathematica. But there really should be a lot more—including lots who won’t be caught dead doing anything that anyone might think had “math” in it.

Really anyone who’s doing anything algorithmic or computational should be using it. Because it’s inevitably just much more efficient than anything else—because it has so much already built in. So one of the new things that we’re doing is to break out the language that Mathematica is based on, and give it a separate life. We’ve been thinking about this for more than 20 years. But now it’s finally going to happen.

We agonized for a long time about what to call the language. We came up with all kinds of names (clever, whimsical, whatever), and actually just recently on my blog I asked people for their comments and suggestions. And I suppose the result was a little embarrassing. Because after all the effort we put into it, by far the most common response about the name we should use was the most obvious and straightforward one. We should call it the Wolfram Language.

So that’s what it’ll be. The language we’ve built for Mathematica, with that huge network of built-in algorithms and knowledge, will be called the Wolfram Language. It’ll use .wolf files, and of course that means its icon has to be something like this:

![Wolfram Language logo]()

What’s going to happen with this language? Well, here’s where things really get interesting. The language was originally built for the desktop platform that’s the current way most people use Mathematica. But in Wolfram|Alpha, for example, the language is running on a large scale in the cloud. And what’s going to be happening over the next few months is that we’ll be releasing a full cloud version. And not only that, there’ll also be a version running locally on mobile, first under iOS.

Why is that important? Well, it really opens up the language, both its use and its deployment. So, for example, we’re going to have the Wolfram Programming Cloud, in which you can freely write code in the language—anything from a pithy one-liner to something giant—right there in the cloud. And then immediately deploy in all sorts of ways.

If you wanted, you could just run it in an interactive session, like in standard Mathematica. But you can also generate an instant API. That you can call from anywhere, to just seamlessly run code in our cloud. Or you can embed the code in a page, or have the code just run in the background, periodically generating reports or whatever. And then you can take the exact same code, and deploy it on mobile too.
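The "instant API" idea can be imitated on a small scale with Python's standard library. This sketch is an assumption-laden stand-in, not the Wolfram Programming Cloud. `deploy_api` serves an ordinary function over HTTP on the local machine, turns query-string parameters into keyword arguments, and returns JSON. `call_api` is the matching client. All the names, including the example function `word_count`, are hypothetical.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlencode, urlparse
from urllib.request import urlopen


def make_handler(func):
    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            # Query-string parameters become keyword arguments: /?text=hello
            params = {k: v[0] for k, v in parse_qs(urlparse(self.path).query).items()}
            try:
                status, payload = 200, {"result": func(**params)}
            except Exception as exc:   # report bad calls as JSON instead of crashing
                status, payload = 400, {"error": str(exc)}
            body = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # silence per-request logging

    return Handler


def deploy_api(func, port=0):
    """Serve func over HTTP in a background thread; port=0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), make_handler(func))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_api(server, **params):
    """Client side: call a deployed function and decode the JSON reply."""
    url = "http://127.0.0.1:%d/?%s" % (server.server_address[1], urlencode(params))
    with urlopen(url) as resp:
        return json.loads(resp.read())


def word_count(text):
    return len(text.split())
```

After `server = deploy_api(word_count)`, calling `call_api(server, text="a b c")` returns `{"result": 3}`. Call `server.shutdown()` when you're done.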

Now something else that we’ve built and refined over the years in Mathematica is our dynamic interface, that uses symbolic expressions to represent controls and interactivity. Not every use of the Wolfram Language uses that interface. But what’s happening is that we’re reinterpreting the interface to optimize it not just for the desktop, but also for the cloud and for mobile.

One place the interface is used big time is in what we call “CDF”: our computable document format. We introduced this a couple of years ago. Underneath it’s Wolfram Language code. On top, it’s a dynamic interactive interface that one can use to make reports and presentations and interactive documents of any kind. Right now, they can be in a plugin in a browser, or they can be standalone on a desktop. What’s happening now is that they can also be on mobile, or, with cloud CDF, they can operate in a pure web page, with no plugin, but just sending every computation to the cloud.

It might sound a bit abstract here. But I think the whole deployment of the Wolfram Language is going to be quite a revolution in programming. There’ve been seeds of this in Mathematica for a quarter of a century. But it’s a kind of convergence of cloud and mobile technology—and frankly our own understanding of the power of what we have—that’s making all this happen now.

You know, the fact that it’s so easy to get so much done in the language is not only important for professional programmers; it’s also really important for kids and anyone else who’s learning to program. Because you don’t have to type much in, and you’re immediately doing serious stuff. And, by the way, you get to learn all those state-of-the-art programming and algorithm concepts right there. And also: there’s an on-ramp that’s easier than anyone’s ever had before, with free-form natural language courtesy of the Wolfram|Alpha engine. It really seems to work very well for this purpose—as we’ve seen in our Mathematica Summer Camp for high-school kids, and our new after-school initiative for middle-school kids.

Maybe I should actually show a demo of all this stuff.

![CountryData["SouthAmerica"]]()

![{Argentina, Bolivia, Brazil, Chile, Colombia, Ecuador, FalklandIslands, FrenchGuiana, Guyana, Paraguay, Peru, Suriname, Uruguay, Venezuela}]()

![CountryData[#, "Flag"] & /@ %]()

![Flags of South American countries]()

![EdgeDetect /@ %]()

![Edges of flags of South American countries]()
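The demo uses Mathematica's built-in `EdgeDetect` on the flag images. As a much cruder stand-in, and not the method `EdgeDetect` itself uses, here's a Python sketch that marks a pixel as an edge when it differs sharply from one of its four neighbors. It is applied to a made-up two-stripe "flag". The function name and threshold are hypothetical.

```python
def edge_mask(image, threshold=0.5):
    """Mark pixels whose value differs from a 4-neighbor by more than threshold.

    image: list of rows of grayscale values in [0, 1].
    Returns a list of rows of 0/1, with 1 on edges.
    """
    h, w = len(image), len(image[0])
    mask = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and abs(image[y][x] - image[ny][nx]) > threshold:
                    mask[y][x] = 1
                    break              # one sharp difference is enough
    return mask


# A toy "flag": a dark stripe next to a light stripe.
flag = [[0, 0, 0, 1, 1, 1] for _ in range(3)]
for row in edge_mask(flag):
    print("".join("#" if v else "." for v in row))
```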

There is a whole mechanism for deploying these dynamic things using CDF.

One application area that’s fun—and topical these days—is using algorithmic processes to make things that one can 3D-print.

![3D printing example]()

That was the Wolfram Language on the desktop, and CDF. Here it is in the Programming Cloud.

![Wolfram Programming Cloud]()

That’s cloud CDF. This also works on iOS, though the controls look a bit different.

In the next little while, you’ll be seeing a variety of platforms based on our technology. The Document Platform, for creating CDF documents, in the cloud or elsewhere. The Presentation Platform, for creating full computable interactive presentations. The Discovery Platform, optimized for the workflow of discovering things with our technologies.

Many of these involve not just the pure language, but also CDF and our dynamic interface technology. But one important thing that’s just happening now is that the Wolfram Language, with all its capabilities, is starting to fit in some very cheap hardware. Like Raspberry Pi. For years if you wanted to embed algorithms into some device, you’d have to carefully compile them into some low-level language or some such. But here’s the great thing: for the first time, this year, embeddable processors are powerful enough that you can just run the whole Wolfram Language, right on them. So you can be doing your image processing, or your control theory computation, right there, with all the power of everything we’ve built in Mathematica.

By the way, I might say something about devices. The whole landscape of sensors and devices is changing, with everything getting more diverse and more ubiquitous. And one important thing we’re doing is making a general Connections Hub for sensors and devices. In effect we’re curating sensors and devices, and working with lots of manufacturers. So that the data that comes from their systems can seamlessly flow into Wolfram|Alpha, or into anything based on the Wolfram Language. We’re building a generic analytics system that anyone can plug into. It can be used in a fully automatic way, like in Wolfram|Alpha Pro. And it can be arbitrarily customized and programmed, using the Wolfram Language.

By the way, another component of this, primarily for researchers, is that we’re building a general Data Repository. What’s neat here is that because of our Wolfram|Alpha linguistic capabilities, we can automatically read and align data. And then of course we can do analysis. When you read a research paper today, if you’re lucky there’ll be some URL listed where you can find data in some raw form. But with our Data Repository people are going to be able to have genuinely “data-backed papers”. Where anyone can immediately do comparisons or new analysis.

Talking of data, I’ve been a big collector of it personally for a long time. Last year here I showed for the first time some of my nearly 25-year time series of personal analytics data. Here’s the new version.

![Plot of every email sent]()

That’s every email I sent, including this year.

![Plot of keystrokes]()

That’s keystrokes.

![Daily rhythms]()

And that’s my whole average daily rhythm over the past year.

Oh, and here’s something useful I built actually right after South by Southwest last year, that I was embarrassed I didn’t have before: the time series of the number of pending and unanswered emails I have. (It’s computing in real time here in our cloud platform.)

![Time series of the number of pending and unanswered emails over the last 30 days]()

It’s sort of a proxy for busyness level. Which is pretty useful in managing my schedule and so on.
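The talk doesn't say how this time series is computed. Here is one plausible way, as a minimal Python sketch. It assumes each email has a received date and an answered date (or `None` if still open), and it counts an email as pending on day d if it arrived on or before d and wasn't answered by then. The function name and the example data are made up.

```python
from datetime import date, timedelta


def pending_series(emails, start, end):
    """Count emails that have arrived but not yet been answered, day by day.

    emails: list of (received, answered) dates; answered is None if still open.
    """
    series = []
    day = start
    while day <= end:
        pending = sum(
            1 for received, answered in emails
            if received <= day and (answered is None or answered > day)
        )
        series.append((day, pending))
        day += timedelta(days=1)
    return series


# Made-up example data.
d = date(2013, 3, 1)
emails = [
    (d, d + timedelta(days=2)),                       # answered two days later
    (d + timedelta(days=1), None),                    # still unanswered
    (d + timedelta(days=1), d + timedelta(days=1)),   # answered the same day
]
for day, n in pending_series(emails, d, d + timedelta(days=3)):
    print(day, n)
```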

Well, bizarre as it seems to me, I may be the human who’s ended up collecting the most long-term data on themselves of anyone.

But nowadays everyone’s got lots of data on themselves. Like on Facebook, for example. And so in Wolfram|Alpha we recently released Personal Analytics for Facebook. It’ll be coming out in an app soon too. So you can just go to Wolfram|Alpha and ask for a Facebook report, and it’ll actually generate a whole little book about you, combining analysis of your Facebook data with public computational knowledge.

My personal Facebook is a mess, but here’s what the system does on it:

![Stephen Wolfram's Facebook report]()

When we first released our Personal Analytics for Facebook we were absolutely draconian about not keeping any data. And no doubt we destroyed some great sociometric science in the making. But a month or so ago we started keeping some anonymized data, and started a Data Donor program, which has been very successful. So now we can explore quite a few things. Like here are a few friend graphs.

![Facebook friend graphs]()

There’s a huge diversity. Each one tells a story. Both about personality and circumstances.

But let’s look at some aggregate information. Like here’s the distribution of the number of friends that people have.

![Distribution of the number of Facebook friends that people have]()

And this shows the distribution of ages of friends for a person of a particular age.

![Distribution of ages of friends for a person of a particular age]()

The distribution gets broader with age. Actually, after about age 25, there’s some sort of new law of nature one discovers: that at any age about half of people’s friends are between 25% younger and 25% older.
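To make that statement concrete: reading “between 25% younger and 25% older” as the range from 0.75 to 1.25 times a person’s own age (our interpretation), the quantity in question for each person is simply the fraction of their friends whose ages fall in that range. A small Python sketch with made-up ages, not the Data Donor data:

```python
def fraction_within(person_age: float, friend_ages: list[float], tolerance: float = 0.25) -> float:
    """Fraction of friends whose ages lie within +/- `tolerance` (relative) of person_age."""
    if not friend_ages:
        return 0.0
    # Assumed reading of "25% younger to 25% older": [0.75 * age, 1.25 * age], inclusive.
    low, high = person_age * (1 - tolerance), person_age * (1 + tolerance)
    inside = sum(1 for age in friend_ages if low <= age <= high)
    return inside / len(friend_ages)


# Made-up example: a 40-year-old, so the band is 30 to 50.
print(fraction_within(40, [28, 33, 41, 47, 55, 62]))  # 0.5
```

The observation in the talk is that this fraction comes out around one half for people older than about 25; the sketch only shows what is being measured, not that result.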

By the way, in Mathematica and the Wolfram Language there’s also now direct access to social media data for Facebook, LinkedIn, Twitter and so on. So you can do all kinds of interesting analysis and visualization.

Actually, talking of Personal Analytics, here’s a new dimension. I’ve been walking around South by Southwest for a couple of days wearing this cute Memoto camera, which takes a picture every 30 seconds. And last night my 14-year-old was kind enough to write a bit of code to analyze what I got. Here’s what he came up with.

![Memoto camera data]()

You know, it’s pretty neat to see how our big technology stack makes all this possible. I mean, even just to read stuff properly from Facebook we’ve got to be able to understand free-form input. Which of course we can with the Wolfram|Alpha Engine. And then to say interesting things we’ve got to use knowledge and algorithms. Then we’ve got to have good automated visualization. And it helps to have state-of-the-art large-graph-manipulation algorithms in the Wolfram Language Engine. And also to have CDF and our Dynamic Interface to generate complete reports.

To me it’s exciting—if a little overwhelming—to see how many things can be moved forward with our technology stack. One big one is education. Of course Wolfram|Alpha and Mathematica are extremely widely used—and well known—in education. And they’re used as central tools in endless courses and so on.

But with our upcoming Cloud Platform lots of new things are going to become possible. And as my way to understand that, I’ve decided it’s time for me to actually make a course or two myself. You know, I was a professor once, before I was a CEO. But it’s been 25 years. Still, I decided the first course to do was one on Data Science. An Introduction to Data Science. I’m having a great time.

![Data Science Course example]()

Data Science is a terrific topic. Really in the modern world everyone should learn it. It’s both immediately useful, and a great way to teach programming, as well as general computational and quantitative thinking.

Between our Cloud Platform and the Wolfram Language, we have a great way to set up the actual course. Here’s the basic setup. Below the video there’s a window where you can just immediately play with all the code that’s shown. And because it’s just very high-level Wolfram Language code it’s realistic to learn in effect just by immersion.

And when it comes to setting up exercises and so on, it’s pretty interesting when you have Wolfram|Alpha-style natural language understanding capabilities and so on. I hope the Data Science course will be ready to test in a limited number of months. And, needless to say, it’s all being built with a very automated authoring system, that’ll allow lots of people to make courses like this. I’m thinking about trying to do a math course, for example.

We get asked about math education a lot, of course. And actually we have a non-profit spinoff called Computer-Based Math that’s been trying to create what we see as a modern computer-informed math curriculum. You see, the current math curriculum was mostly set a century ago, when the world was very different. Two things have changed today: first, we’ve got computers that can automate the mechanical doing of math. And second, there are lots of new and different ways that math gets used in the world at large.

![Computer-Based Math]()

It’s going to be a long process modernizing math education around the world. We’d been wondering which country would be the first to really commit to Computer-Based Math. Turns out it’s Estonia, which signed up a few weeks ago.

So we’re slowly moving toward people being educated in the computational paradigm. Which is good, because the way I see it, computation is going to become central to almost every field. Let’s talk about two examples—classic professions: law and medicine. It’s funny, when Leibniz was first thinking about computation at the end of the 1600s, the thing he wanted to do was to build a machine that would effectively answer legal questions. It was too early then. But now we’re almost ready, I think, for computational law. Where for example contracts become computational. They explicitly become algorithms that decide what’s possible and what’s not.

You know, some pieces of this have already happened. Like with financial derivatives, like options and futures. In the past these used to just be natural language contracts. But then they got codified and parametrized. So they’re really just algorithms, which of course one can do meta-computations on, which is what has launched a thousand hedge funds, and so on.
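As an illustration of what “codified and parametrized” means, here is a plain-vanilla European option reduced to a type, a strike price and a quantity, with its payoff at expiry written as a function. The class and function names are our own, and a real contract has many more terms (premium, settlement, exercise rules). The point is that once the contract is a function, other computations on it, such as evaluating a combination of contracts over a range of prices, become easy:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EuropeanOption:
    """A simplified option contract: only what is needed to compute its payoff at expiry."""
    kind: str  # "call" or "put"
    strike: float  # agreed exercise price
    quantity: int = 1

    def payoff(self, spot_at_expiry: float) -> float:
        """Amount the holder receives at expiry, given the underlying's price then."""
        if self.kind == "call":
            per_unit = max(spot_at_expiry - self.strike, 0.0)
        elif self.kind == "put":
            per_unit = max(self.strike - spot_at_expiry, 0.0)
        else:
            raise ValueError(f"unknown option kind: {self.kind!r}")
        return per_unit * self.quantity


def portfolio_payoff(options: list[EuropeanOption], spot_at_expiry: float) -> float:
    """A simple 'meta-computation': total payoff of several contracts at one price."""
    return sum(option.payoff(spot_at_expiry) for option in options)


# A straddle: one call and one put at the same strike.
straddle = [EuropeanOption("call", 100.0), EuropeanOption("put", 100.0)]
print([portfolio_payoff(straddle, s) for s in (80.0, 100.0, 120.0)])  # [20.0, 0.0, 20.0]
```

This ignores the premium paid for the options, so it shows gross payoff rather than profit.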

Well, eventually one’s going to be able to make all sorts of legal things computational, from mortgages to tax codes to perhaps even patents. Now to actually achieve that, one has to have ways to represent many aspects of the real world, in all its messiness. Which is what the whole knowledge-based computing of Wolfram|Alpha is about.

![sore throat + cough]()

How about medicine? To me probably the single most important short-term target in medicine is diagnosis. If you get a diagnosis wrong—and an awful lot are wrong in practice—then all the effort and money you spend is going to be wasted, and is often even going to be harmful. Now diagnosis is a difficult thing for humans. And as more is discovered in medicine—and medicine gets more specialized—it gets even more difficult. But I suspect that in fact diagnosis is in some sense not so hard for computers. But it’s a big project to make a credible automated diagnosis system. Because you have to cover everything: it’s no good just doing one particular kind of disease, because then all you’re going to do is say that everyone has it.

By the way, the whole area of diagnosis is about to change—as a result of the arrival of sensor-based medicine. It used to be that you could ask a question or do a test, and the result would be one bit, or one number. But now it’s routine to be able to get lots and lots of data. And if we’re really going to use that data, we’ve got to use computers; humans just don’t deal with that kind of thing. It’s an ambitious project with many pieces, but I think that using our technology stack—and some ideas from science I’ve developed—we know how to do automated medical diagnosis. And we’re actually spinning off a company to do this.

You know, it’s interesting to think about the broad theory of diagnosis. And I think an interesting model for medical diagnosis is software diagnosis—figuring out what’s going wrong with a large running software system. In medicine we have all these standard diagnosis codes. For an operating system one might imagine having things like “diseases of the memory management system” or “diseases of the keyboard driver”. In medicine, we’re starting to be able to measure more and more. In software we can in principle monitor almost everything, but we need methodologies to interpret what we’re seeing.

By the way, even though I think diagnosis is in the short term a critical point in medicine, I think in the long term it’s simply going to go away. In fact, from my science—as well as the software analogy—I think it’s clear that the idea of discrete diseases is just wrong. Of course, today we have just a few thousand drugs and surgeries we can use. But I think more and more we’ll be using algorithmic treatments. Whether it’s medical devices that behave according to algorithms, or whether it’s even programmable drugs that effectively do a computation at the molecular scale to work out how to act. And once the treatments are algorithmic, we’re really going to want to go directly from data on symptoms to working out the treatment, often adaptively in real time.

My guess is it’s going to end up a bit like a financial portfolio. You watch what the stocks do, and you have algorithms to decide how to respond. And you don’t really need to have a verbal description—like the technical trader’s “head and shoulders” pattern or something—of what the stock chart is doing.

By the way, when you start thinking about medicine in fundamentally computational terms, it gives you a different view of human mortality. It’s like the operating system that’s running, and over the course of time has various kinds of trauma and infections, starts running slower, and eventually crashes, and dies. If we’re going to avoid mortality, we need to understand how to intervene to keep the operating system—or the human—up and running. There are lots of interim steps. Taking over more and more biological functions with technology. And figuring out how to reprogram pieces of the molecular machine that is our body. And figuring out if necessary how to “hit the pause button” to freeze things, presumably with cryonics.

By the way, it’s bizarre how few people work on this. Because I’m sure that, just like cloning, there’s just going to be a wacky procedure that makes it possible—and once we know it, we’re just going to be able to do it quite routinely, and it’s going to be societally very important. But in the end, we want to solve the problem of keeping all the complexity that is a human running indefinitely. There are some fascinating basic science problems here. Connected to concepts like computational irreducibility, and a bit to the traditional halting problem. But I have no doubt that eventually it’ll be solved, and we’ll achieve effective human immortality. And when that happens I expect it’ll be the single biggest discontinuity in human history.

![Cellular automata]()

You know, as one thinks about such things, one can’t help wondering about the general future of the human condition. And here’s something someone like me definitely thinks about. I’m spending my life trying to automate things. Trying to make it possible to do automatically with computation things that humans used to have to do themselves.

Now, if we look at the arc of human history, the biggest systematic change through time is the arrival of more and more technology, and the automation of more and more kinds of tasks. So here’s a question: what if we succeed in automating everything? What will happen then? What will the humans do? There’s an ultimate—almost philosophical—version of this question. And there’s also a practical next-few-decades version.

Let’s start with the ultimate version. As we go on and build more and more technology, what will the end point be? We might assume that we could somehow go on forever, achieving more and more. But the Principle of Computational Equivalence tells us that we cannot. Once we have reached a certain level, everything is already in a sense possible. And even though our current engineering has not yet reached this point, the Principle of Computational Equivalence also tells us that this maximal level of computational sophistication is not particularly rare. Indeed it happens in many places in the physical world, as well as in systems like simple cellular automata.

![Cellular automata]()

And it’s not too hard to see that as we improve our technology, getting down to the smallest scales, and removing everything that seems redundant, we might wind up with something that looks just like a physical process that already happens in nature. So does this mean that in the ultimate future, with all that great automation and technology, all we’ll achieve is just to produce something that’s indistinguishable from zillions of things that already exist in nature?

In some sense, yes. It’s a sort of ultimate Copernicanism: not only is our Earth not the center of the universe, and our bodies not made of something physically unique. But also, what we can achieve and create with our intelligence is not in a fundamental sense different from what nature is already doing.

So is there any meaningful ultimate future for us? The answer is yes. But it’s not about doing some kind of scientific utopian thing, and achieving some ultimate perfect state that’s independent of our history. Rather, it’s about doing things that depend on all those messy details of us humans and our history.

Here’s a way to understand this. Imagine our technology has got us a complete AI sitting in a box on a desk. It can do all sorts of incredible things; all sorts of sophisticated computations. The question is: what will it choose to do? It has no intrinsic way to decide. It needs some kind of goal, some kind of purpose, imposed on it. And that’s where we humans and our history come in. I mean, for humans, there is again no absolute purpose abstractly defined. We get our notion of purpose from the details of our existence and our history. And to achieve ultimate technology is in a sense empty unless purposes are defined for it, and that’s where we humans come in.

We can begin to see this pretty well even right now. In the past, our technology was such that we typically had to define quite explicitly what systems we build should do, say by writing code that defines each step they should take. But today we’ve increasingly got much more capable systems, that can do all kinds of different things. And we interact with them in a sense by injecting purpose. We define a purpose or a goal, and then the system figures out how it can best achieve that goal.

Well, of course, human purposes have evolved quite a bit over the course of human history. And often their evolution is connected to the arrival of technology that makes more things possible. So it’s not too clear what the limit of this kind of co-evolving system will be, and whether it will turn out to be wonderful or terrible. But in the nearer term, we can ask what effect increasing automation will have on people and society. And actually, as I was thinking about this recently, I thought I’d pull together some data about what’s happened with this historically. So here are some plots over the past 150 years of what fractions of people in the US have been in different kinds of occupations. Blue for males; pink for females.

![Fractions of people in the US who have been in different kinds of occupations over the last 150 years]()

There are lots of interesting details here, like the pretty obvious direct and indirect effects of larger government over the last 50 years. But there’s also a clear signature of automation, with a variety of kinds of occupations simply going away. And this will continue. And indeed my expectation is that over the coming years a remarkable fraction of today’s occupations will successfully be automated. In the past, there’ve always been new occupations that took the place of ones that were automated away. And my guess, or perhaps hope, is that for most people some hybrid of avocation and occupation will emerge.

Which brings me to something I’ve been thinking about quite a lot recently. I’m mostly a science, technology and ideas guy. But I happen also to be very interested in people. And over the years I’ve had the good fortune to work with—and mentor—a great many very talented people. But here’s something I’ve noticed. Many people—and young people in particular—have an incredibly difficult time picking a good occupation—or avocation—for themselves. It’s a bit of a puzzle. People have certain sets of talents and interests. And there are certain niches that exist in the world at any given time. The problem is to match a given person with a niche.

Now sometimes people—and I was an example—pick out a pretty clear niche by the time they’re early teenagers. But an awful lot of people don’t. Usually there are two problems. First, people don’t really identify their skills and interests. And second, people don’t know what’s possible to do in the world. And in the end, an awful lot of people pick directions—almost at random—that aren’t in fact very good for them. And I suspect in terms of wasted resources in the world, this is pretty high up there.

You know, I have a kind of optimistic theory—that’s supported by a lot of personal observation—that for almost every person, there’s at least one really good thing they could be doing, that they will find really fulfilling. They may be lucky or unlucky about what value the world places on that thing at a given time in history. But if they can find that thing—and it often isn’t so easy—then it’s great.

Well, needless to say, I’ve been thinking what can be done. I’ve personally worked on the problem many times. With many great results. Although I have to say that almost always I’ve been dealing with highly capable individuals in good circumstances. And I do want to figure out how to generalize, to younger folk and less good circumstances. But whatever happens, there’s a puzzle to solve. A little like medical diagnosis. Requiring understanding the current situation. Then knowing what’s possible. And one of the practical challenges is knowing enough about how the world is evolving, and what new occupations and ways to operate in the world are emerging.

I’m hoping to do more in this direction. I’m also thinking a bunch about the structure of education. If people have an idea what they might like to do, how do they develop in that direction? The current system with college and so on is pretty inflexible. But I think there are better alternatives, that involve effectively doing diverse mentored projects. Which is something we’ve seen very successfully in the summer schools we’ve done over the past decade.

But anyway, with all this discussion about what people should do: that’s a big challenge for someone like me too. Because I’m in this situation where I’ve been building things for 30 years, and now there are just an absurd number of things that what I’ve built makes possible. We’re pursuing a lot of things at our company. But we only have 700 people, which isn’t enough for everything we want to do. I made a decision long ago to have a simple private company, so we could concentrate on the long term, and on what we really wanted to do. And I’m happy to say that for the last quarter century that’s worked out very well. And it’s made possible things like Wolfram|Alpha—that probably nobody but me would ever have been crazy enough to put money into.

But now we’ve just got too many opportunities, and I’ve decided we’re just leaving too many great ideas—and great technology prototypes—on the table. So we’ve been learning how to spin off companies to develop these things. And actually, we have a whole scheme now for setting up an outside fund to invest in spinoffs that we’re doing.

I’ve been used to architecting technical systems. But architecting these kinds of business structures is also pretty interesting. Sort of trying to extend the machine I’ve built for turning ideas into reality. You know, I like to operate by having a whole portfolio of long-range ideas. Which I carry around with me for a long time. Like for Wolfram|Alpha it was more than 30 years. Gradually waiting for the circumstances and the right time to pursue them. And as I said earlier, I would probably be doing my physics project now, if technology opportunities hadn’t got in the way.

Though I have to say that the architecture of that project is tricky too. Because it’s not clear how to fit it into the world. I mean, lots of people, including myself, are incredibly curious about it. But for the physics community it’s a scary, paradigm-breaking, proposition. And it’s going to be an uphill story there.

And the issue for someone like me is: how much does the world really want something like the fundamental theory of physics done? It’s always great feedback for me to do projects where people really like the results. I don’t know about this one. I’ve been thinking about trying to find out by putting up a Kickstarter project or something for finding the fundamental theory of physics. It’s kind of funny how one goes from that level of practicality to thinking about the structure of our whole universe. It’s fun, and to me it’s invigorating.

Well, there are lots more things it’d be fun to talk about. But let me stop here, and hope that you’ve enjoyed hearing a little about what’s going on these days in my small corner of the world.

The plots above seem to support the idea that “life’s complicated”. But if one aggregates the data a bit, it’s easy to end up with plots that seem like they could just be the result of some simple physics experiment. Like here’s the distribution of the number of emails I’ve sent per day since 1989:


I was curious just how strong this correlation is: in effect just how scheduled all those calls are. And looking at the data I found that at least for my external phone meetings at least half of them do indeed start within 2 minutes of their appointed times. For internal meetings—which tend to involve more people, and which I normally have scheduled back-to-back—there’s a somewhat broader distribution, shown on the left.


When one looks at the distribution of call durations one sees a kind of “physics-like” background shape, but on top of that there’s the “obviously human” peak at the 1-hour mark, associated with meetings that are scheduled to be an hour long.

When the book came out, I had all the reviews we could find diligently archived. And I always intended at some point to systematically read them. But somehow a decade has gone by, and I have not done so. And as I write this post, I have on my desk a daunting pile of printed copies of reviews, as thick as the book itself. But back when they were archived, for reasons I don’t now know, each review was at least put into a “star-rating” category, which we can now use to make a pie chart. And while I’m not sure just how much these statistics really mean, it is perhaps interesting that positive—or at least neutral—reviews overall significantly outweighed negative ones.


![ArduinoAnalogRead[0]](http://blog.stephenwolfram.com/data/uploads/2012/10/In-1x.png)

![Dynamic[AngularGauge[ArduinoAnalogRead[0], {0, 1023}], UpdateInterval -> 0]](http://blog.stephenwolfram.com/data/uploads/2012/10/In-2.png)

![data = {}; Dynamic[rawdata = ArduinoAnalogRead[0]; AppendTo[data, rawdata]; ListLinePlot[data, Filling -> Axis, ImageSize -> 500], UpdateInterval -> 0]](http://blog.stephenwolfram.com/data/uploads/2012/10/In-3.png)

![ARDroneFlyPathGraphics[Table[{Sin[u], Sin[2u]}, {u, 0, 2π, π/5}]]](http://blog.stephenwolfram.com/data/uploads/2012/10/in4x.png)

![CountryData["SouthAmerica"]](http://blog.stephenwolfram.com/data/uploads/2013/03/SXSW-In11.png)

![CountryData[#, "Flag"] & /@ %](http://blog.stephenwolfram.com/data/uploads/2013/03/SXSW-In21.png)

![Example of Leibniz writing down an infinite series for Sqrt[2]](http://blog.stephenwolfram.com/data/uploads/2013/05/2-a.png)